I don’t know what the Bose QC35-ii is doing: the Action button refuses to do anything unless it’s sure it’s talking to either Google Assistant or Alexa (no Siri mentioned in the app, interestingly).

I can’t get the 2021 version of the Star Trek TNG Bluetooth Combadge to trigger anything when connected to a Linux machine. The regular press is triggering KEY_PLAYCD and KEY_PAUSECD, thus mapping onto the relevant X events and interacting well with my desktop’s media players (particularly Chrome) — but doublepress, which normally activates Siri on my iPad, sends no input device events on the relevant /dev/input/event* special file. There’s just no traffic.
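To make "watching the event file" concrete, here is a minimal sketch that decodes raw records read from an event device. It assumes the usual Linux `struct input_event` layout (a timeval followed by type, code and value) and the key code values from `linux/input-event-codes.h`. The `watch()` helper and the device path passed to it are hypothetical.

```python
import struct

# Layout of struct input_event on Linux: a timeval (two C longs), then
# __u16 type, __u16 code, __s32 value. Native sizes and alignment are used.
EVENT_FORMAT = "llHHi"
EVENT_SIZE = struct.calcsize(EVENT_FORMAT)

EV_KEY = 0x01
# Values as defined in linux/input-event-codes.h.
KEY_NAMES = {200: "KEY_PLAYCD", 201: "KEY_PAUSECD"}


def decode_event(raw: bytes):
    """Turn one raw input_event record into (key name, state), or None for non-key events."""
    _sec, _usec, ev_type, code, value = struct.unpack(EVENT_FORMAT, raw)
    if ev_type != EV_KEY:
        return None
    state = {0: "release", 1: "press", 2: "repeat"}.get(value, str(value))
    return KEY_NAMES.get(code, f"code {code}"), state


def watch(path: str) -> None:
    """Print key events from an event device (hypothetical helper; needs read permission)."""
    with open(path, "rb", buffering=0) as dev:
        while True:
            raw = dev.read(EVENT_SIZE)
            if len(raw) < EVENT_SIZE:
                break
            decoded = decode_event(raw)
            if decoded is not None:
                print(*decoded)
```

Calling `watch()` on the combadge's event file should print the regular press as KEY_PLAYCD / KEY_PAUSECD and, per the observation above, nothing at all for a double press.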

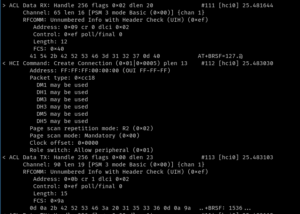

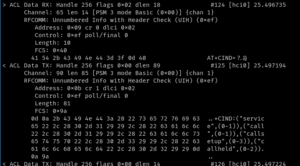

btmon is an interesting discovery, and it pointed me toward the world of AT commands flowing as ACL Data on my local hci0 device. Many of the ones flowing are documented in Qt Extended’s modem emulator component documentation from 2009: it starts with the combadge sending AT+BRSF and seeing a response, then sending AT+CIND and getting a response, and so on and on and on.
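As a rough illustration of what those command lines look like once extracted from the ACL payload, here is a small sketch that splits an extended ("+"-prefixed) AT command into its name, its kind and its argument. The `parse_at` helper is made up for this example, and it does not attempt to parse btmon's own output format.

```python
import re

# "AT", then a "+NAME", then an optional operator ("=?", "=" or "?") and argument.
AT_LINE = re.compile(r"^AT(?P<name>[+][A-Z]+)(?P<op>=\?|=|\?)?(?P<arg>.*)$")


def parse_at(line: str):
    """Split one extended AT command line into (name, kind, argument), or None."""
    match = AT_LINE.match(line.strip())
    if match is None:
        return None
    kind = {"=?": "test", "=": "set", "?": "read", None: "execute"}[match["op"]]
    return match["name"], kind, match["arg"]


print(parse_at("AT+BRSF=127\r"))
```

The first command of the exchange, `AT+BRSF=127`, comes out as a "set" of `+BRSF` with the argument `127`.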

If I am reading everything right, the values returned are decimal numbers representing a binary mask. btmon output seems to indicate the combadge (‘hands-free’ device) claims it supports 127 (binary 111 1111, i.e. bits 0 through 6 all set, which I take to be every functionality listed in the Modem Emulator docs), and the desktop (‘audio gateway’) says it supports 1536, which is binary 110 0000 0000, meaning the only bits that are set are in the reserved range from the perspective of the 2009 Modem Emulator documentation.
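The arithmetic is easy to double-check. A minimal sketch that lists which bit positions are set in each of the two values (`set_bits` is just a helper name for this example):

```python
def set_bits(mask: int) -> list[int]:
    """Return the positions (0 = least significant) of the bits set in mask."""
    return [bit for bit in range(mask.bit_length()) if (mask >> bit) & 1]


print(format(127, "b"), set_bits(127))    # combadge, the hands-free side
print(format(1536, "b"), set_bits(1536))  # desktop, the audio gateway side
```

This gives bits 0 to 6 for 127, and only bits 9 and 10 for 1536.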

A list of flags can also be found in a 2013 bluez test for HFP. Over there, one of the formerly ‘reserved’ bits is specified as being AG_CODEC_NEGOTIATION, but we can luckily find the other one in ChromiumOS’s source code: inside something called adhd (apparently, ChromiumOS Audio Daemon) and its cras component’s server part, the constants are in cras_hfp_slc.h. So, the other bit the desktop claims to support is AG_HF_INDICATORS, which also has nothing to do with remote control.

That source code also indicates we can read the Hands-Free Profile specification, the latest one being version 1.8 available on Bluetooth.com.

So, if I am interpreting everything correctly, the combadge says it supports “everything”, but the desktop doesn’t tell it back that it knows what voice recognition is. No wonder we’re not seeing any traffic.
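The same conclusion as a sketch with named flags. Only three audio gateway bits are listed here. The positions of the codec negotiation and HF indicators bits follow from the 1536 value above, while the voice recognition position (bit 2) is my reading of the Hands-Free Profile specification and should be checked against it. The `AgFeature` enum and `describe` helper are names made up for this example.

```python
from enum import IntFlag


class AgFeature(IntFlag):
    # Subset of the audio gateway feature bits; positions are assumptions
    # to be verified against the HFP specification.
    VOICE_RECOGNITION = 1 << 2
    CODEC_NEGOTIATION = 1 << 9
    HF_INDICATORS = 1 << 10


def describe(mask: int) -> list[str]:
    """Names of the known feature flags present in mask."""
    return [flag.name for flag in AgFeature if flag.value & mask]


desktop = 1536
print(describe(desktop))
print("voice recognition advertised:", bool(desktop & AgFeature.VOICE_RECOGNITION))
```

With the desktop's 1536, only the codec negotiation and HF indicators flags show up, and the voice recognition check is false.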

So, we don’t quite need to support Apple-specific HFP commands such as AT+XAPL (bluetooth accessory identification), AT+APLSIRI (confirming the device supports specifically Siri) or AT+IPHONEACCEV (sharing battery level), which is nice. Both of the platforms named in the combadge’s marketing materials and manual (Google Now, i.e. Assistant, and Siri) document support for AT+BVRA from the Hands-Free Profile specification; see Google Assistant’s “Voice Activation Optimization” document for Bluetooth devices, as well as the “Accessory Design Guidelines for Apple Devices” (release R16 talks about this in section 30.3.1).

Instead, it looks like we mainly need to trick the desktop into responding to the combadge’s AT+BRSF request with a bitmask that includes the voice recognition bit, and move on from that, hoping the combadge starts emitting AT+BVRA, and that we can easily programmatically capture that!
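Purely as a sketch of the idea, not of how any real Bluetooth stack is structured: the reply to AT+BRSF would carry the existing mask with the voice recognition bit OR-ed in, and the thing to watch for afterwards is `AT+BVRA=1`. The bit position (bit 2) is my reading of the HFP specification, and both helper names are hypothetical.

```python
VOICE_RECOGNITION = 1 << 2  # assumed audio gateway feature bit; verify against the HFP spec


def brsf_reply(ag_features: int) -> bytes:
    """Build the +BRSF result plus OK, with the voice recognition bit forced on."""
    patched = ag_features | VOICE_RECOGNITION
    return f"\r\n+BRSF: {patched}\r\n\r\nOK\r\n".encode("ascii")


def is_voice_trigger(line: str) -> bool:
    """True when the hands-free device asks to start voice recognition."""
    return line.strip() == "AT+BVRA=1"


print(brsf_reply(1536))
```

For the desktop's current value of 1536, the patched mask would be 1540.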

But that’s a topic for another post.

–

via blog.vucica.net

Did you end up progressing on this? I want to set up some scripts to run when I press that button but have found zero docs on how to do so on Linux.

Given the rise of LLMs and such, I used them to probe a little bit around the relevant codebases, but have tried nothing they generated yet. (With some guidance, one output came very close to resembling a legitimately implementable approach.)

I am pretty sure that the 2025 approach would involve Pipewire and Bluez handing over some control to a DBus service, either something resembling Ofono or hfpd interfaces, but I have nothing I actually tried and can summarize in an article. I don’t recall the specifics, but rather than just flipping the flag and letting Pipewire/Bluez handle the additional AT command traffic, I think Pipewire or Bluez swaps in a different implementation of the handsfree profile when the relevant DBus service is present. (I don’t recall how they detect if it is “present”: whether it’s just activation based on an interface, or whether the service has to tell Pipewire/Bluez, or whether reconfiguration is required.)

Another approach, of course, would be to patch Pipewire to flip that bit without involving DBus, and then dealing with followup traffic would be the next issue. It may be slightly cleaner than requiring a “full” (how full?) HFP implementation.

No matter what, I suspect a fun complete approach would involve creating a virtual input device (maybe somehow just hacking around libinput, maybe a full virtual USB HID device), therefore hiding the implementation detail of this not being an actual keypress, but I did not even get far enough to reliably get the “command” event that would be injected somewhere else.
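For the virtual input device idea, a minimal sketch of just the payload: the bytes for one key tap, in the `struct input_event` layout that an already-created uinput device would accept on write. Creating the device itself (the ioctl setup on /dev/uinput) is left out. The choice of KEY_VOICECOMMAND and its value, taken from `linux/input-event-codes.h`, is an assumption, and `key_tap` is a hypothetical helper.

```python
import struct

EVENT_FORMAT = "llHHi"  # struct input_event: timeval (two longs), type, code, value
EV_SYN, EV_KEY = 0x00, 0x01
SYN_REPORT = 0
KEY_VOICECOMMAND = 0x246  # assumed key choice; value from linux/input-event-codes.h


def key_tap(code: int = KEY_VOICECOMMAND) -> bytes:
    """Bytes for a press and a release of one key, each followed by a sync report."""

    def event(ev_type: int, ev_code: int, value: int) -> bytes:
        # Timestamps are left at zero; assumption: the kernel stamps injected events.
        return struct.pack(EVENT_FORMAT, 0, 0, ev_type, ev_code, value)

    return b"".join([
        event(EV_KEY, code, 1), event(EV_SYN, SYN_REPORT, 0),
        event(EV_KEY, code, 0), event(EV_SYN, SYN_REPORT, 0),
    ])
```

Whatever ends up catching AT+BVRA could then write `key_tap()` to the uinput file descriptor, and other software would only ever see a keypress.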

To expand on the previous reply: this matches both what I researched on-and-off before LLMs were a big thing, and it matches what I previously got an LLM to output for me: https://deepwiki.com/search/i-have-a-bluetooth-headset-tha_fe8e14f7-7d35-407f-be19-137ed97aff01

It’s a decent start: it documents the existence of a native backend, an ofono backend and an hsphfpd backend, configurable via bluez5.hfphsp-backend (which is seemingly documented in pipewire-props(5)).

I hope to try this end-to-end — some time when I’m not on my phone on a train, of course.

One more follow-up with a previous query I did, with a few followup questions. Again, I have not tried this yet; no point in trying to phrase this in the main body or in a new post until I do so: https://deepwiki.com/search/whats-the-decision-making-proc_6ea7799e-06c0-46fd-a552-5a868598b03a

(I suppose I'm also using this comment section as a dumping ground for the artifacts I collected involving this. I'd also like to point out: I'm not a fan of LLMs; I have concerns about the trustworthiness of their outputs; I have ethical concerns about the training approaches LLMs seem to have taken — and how literature, articles and openly available code were ingested. But since this is _very much_ not an important project for me, just a curiosity, I don't mind using what's available for free to me. After all, LLMs probably also ingested public code and articles I wrote.)

Questions asked and main takeaways:

1. "What's the decision making process in each of the bits sent in response to AT+BRSF command?"

– "The AT+BRSF command is part of the HFP Service Level Connection establishment where the Hands-Free device sends its supported features to the Audio Gateway (AG). backend-native.c:886-929"

– "ModemManager Features: Additional features are added based on ModemManager availability through mm_supported_features(). backend-native.c:926"

2. "Can we get it to send the 'voice command' support bit? Perhaps via mm_supported_features?"

– "Looking at the current implementation, the mm_supported_features() function only returns two features: modemmanager.c:721-724"

– "The voice command feature bit you're referring to is SPA_BT_HFP_AG_FEATURE_VOICE_RECOG, which is defined in the HFP AG feature enum: defs.h:247"

– "Currently, this feature is not being set anywhere in the codebase. To add voice command support, you would need to modify the mm_supported_features() function[…]"

– "Adding the voice command bit would signal to HF devices that the Audio Gateway supports voice recognition activation (typically through the "Hey Siri" or "OK Google" commands). However, you'd also need to implement the actual voice recognition command handling in the AT command parser to make this functional, not just advertise the capability."

So, that's that for backend-native.

3. "Is there any backend plugin that supports sending SPA_BT_HFP_AG_FEATURE_VOICE_RECOG?"

– "Looking at the PipeWire Bluetooth backends, none of the current backend plugins support sending SPA_BT_HFP_AG_FEATURE_VOICE_RECOG." "The codebase has three HFP/HSP backend implementations:"

– "Native Backend: This is the main backend that handles HFP AG functionality directly. backend-native.c:926 The voice recognition feature is defined in the enum but never set anywhere in the native backend implementation. defs.h:247"

– "ModemManager Integration: The mm_supported_features() function only returns call rejection and enhanced call status features, not voice recognition. modemmanager.c:721-724"

– "OFono Backend: Referenced in the backend definitions but would be a separate implementation for telephony integration. defs.h:857-867"

– "HSPHFPD Backend: Also referenced as an alternative backend but would delegate HFP handling to an external daemon. defs.h:869-879"

– "The native backend is the most complete implementation and is where you'd need to add voice recognition support. Currently, it only sets codec negotiation, HF indicators, eSCO S4, and ModemManager features in the AG feature bitmask. backend-native.c:925-927"

– "To implement voice recognition support, you'd need to modify the native backend to include SPA_BT_HFP_AG_FEATURE_VOICE_RECOG in the feature bitmask and implement the corresponding AT command handlers (like AT+BVRA) for voice recognition activation/deactivation."

4. "How would use of backend_ofono_new work, given there is dbus mentioned? Same for hsphfpd? How does such a backend supply those bits?"

– "Both backends are D-Bus-based implementations that delegate HFP handling to external services:"

– "OFono Backend"

– "The OFono backend connects to the org.ofono D-Bus service for telephony integration. backend-ofono.c:857 It registers itself as an HF Audio Agent with OFono during initialization. backend-ofono.c:648-710"

– "The backend registers supported codecs (CVSD and optionally mSBC) with OFono, but does not directly control AG feature bits. backend-ofono.c:664-670 Instead, OFono itself acts as the Audio Gateway and handles the AT+BRSF negotiation. PipeWire's OFono backend only receives audio transport information via D-Bus when OFono establishes SCO connections. backend-ofono.c:141-182"

– "HSPHFPD Backend"

– "The HSPHFPD backend connects to the org.hsphfpd D-Bus service. backend-hsphfpd.c:869 It registers as an audio application with HSPHFPD and receives endpoint notifications. backend-hsphfpd.c:1179-1218"

– "Like OFono, HSPHFPD acts as the HFP Audio Gateway itself and handles AT command processing. backend-hsphfpd.c:1075-1085 PipeWire's HSPHFPD backend only manages audio transport setup and codec selection (CVSD/mSBC), not AG feature advertisement. backend-hsphfpd.c:868-901"

– "Feature Bit Control"

– "Neither the OFono nor HSPHFPD backends can directly supply SPA_BT_HFP_AG_FEATURE_VOICE_RECOG because they don't handle the AT+BRSF response themselves. The external services (OFono/HSPHFPD) control what AG features are advertised to HF devices."

– "To add voice recognition support:"

– "OFono: You'd need OFono itself to support and advertise voice recognition features"

– "HSPHFPD: You'd need HSPHFPD to handle voice recognition AT commands and advertise the capability"

– "Native Backend: Only this backend gives PipeWire direct control over AG feature bits backend-native.c:925-929"

– "Notes"

– "The D-Bus backends are designed for integration with existing telephony stacks that already implement HFP Audio Gateway functionality. They delegate the protocol handling to external services while PipeWire focuses on audio transport management."

———

The first logical thing to do would be to try OFono, seemingly originally created by Intel and jointly announced by Intel and Nokia: https://git.kernel.org/pub/scm/network/ofono/ofono.git/about/ . The most recent copy I could find on GitHub is https://github.com/sunweaver/ofono (while I do not like monoculture and centralization of things on one "hub", many tools like Sourcegraph or Deepwiki seem to assume GitHub is the only VCS forge in existence). I've now triggered indexing on Deepwiki so we now have this to explore the code a bit: https://deepwiki.com/sunweaver/ofono (9fd5cb1aac65dda07b0e4b42b901a1a4c433daff)

I asked a few questions: https://deepwiki.com/search/whats-the-decision-making-proc_7eef58ad-33d3-43d7-9cd5-30dda5d0e061 and it looks like this is the best bet for a 'full' implementation: the AT+BRSF bitmask already includes the HFP_HF_FEATURE_VOICE_RECOGNITION bit set to true (in drivers/hfpmodem/slc.c), and bvra_notify() is registered as a callback for AT+BVRA (drivers/hfpmodem/handsfree.c). This invokes ofono_handsfree_voice_recognition_notify(), which sets the property VoiceRecognition on the DBus "org.ofono.Handsfree" interface, meaning the signal "PropertyChanged" gets triggered. This all works mainly by ofono taking over in bluez as the handler for this profile, so it gets to control how the profile gets registered in bluez and what SDP announcements it should be making (plugins/hfp_hf_bluez5.c). But the LLM takes the opportunity here to say something hallucinated: "The SDP profile registration also includes voice recognition in the advertised features. hfp_hf_bluez5.c:763-778". That is not true: HFP_SDP_HF_FEATURE_VOICE_RECOGNITION (which appears in src/hfp.h) is not set in connect_handler(). Upon asking for clarification, the LLM recognized the mistake and said "This means oFono advertises voice recognition capability during the AT command exchange (AT+BRSF) but not in the initial SDP service discovery record." So either advertising in the SDP record is not required, or I uncovered a bug. (Not bad, given I'm not actually trying to do anything with Ofono today.)
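If that reading of oFono is right, the consumer side could be as small as the sketch below: a callback for the PropertyChanged signal that runs some action when VoiceRecognition turns on. I am assuming the signal carries a (name, value) pair. The D-Bus subscription itself is left out, since it depends on which D-Bus library is used, and `on_property_changed` is a name made up for this example.

```python
def on_property_changed(name, value, action) -> bool:
    """Run action() when the VoiceRecognition property turns on; report whether it fired."""
    # bool() rather than "is True": D-Bus bindings may hand over their own boolean type.
    if name == "VoiceRecognition" and bool(value):
        action()
        return True
    return False


fired = []
on_property_changed("VoiceRecognition", True, lambda: fired.append("button pressed"))
print(fired)
```

The `action` argument is where a script launch or a synthetic keypress would go.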

The next logical thing would be to try out whether github.com/pali/hsphfpd-prototype (d294d064) works with Pipewire as-is, and how to set it up. It seems to expose "org.hsphfpd" interface on DBus: https://deepwiki.com/pali/hsphfpd-prototype/3-d-bus-api-reference all implemented in a single .pl file, and it seems to have at least "audio agent" and "telephony agent" "examples": https://deepwiki.com/pali/hsphfpd-prototype/5.1-telephony-agent-example https://deepwiki.com/pali/hsphfpd-prototype/5.2-audio-agent-example

Regarding this implementation of the hsphfpd interface outside of Pulseaudio/Pipewire: while looking at this on the train today, I did notice the author had some technical unhappiness with Pipewire in 2021: https://github.com/pali/hsphfpd-prototype/issues/11 (which may have been resolved in 4 years, unclear) and this itself pointed at some social unhappiness with the way attempted patches to Pulseaudio were handled: https://github.com/EHfive/pulseaudio-modules-bt/issues/115#issuecomment-739032966 (which indicates inclusion of some work into Pipewire); some patches were originally sent here: https://gitlab.freedesktop.org/pulseaudio/pulseaudio/-/merge_requests/227#note_720242 (it also links to issue 13 which has been deleted).

Therefore, it is not clear to me how technically useful it is to integrate the prototype "org.hsphfpd" into a pipewire-based implementation, or how ethical. However: assuming it is possible to have a much smaller daemon implementing this interface and do just the bare minimum to hook up Pipewire and Bluez with something that can deal with AT+BVRA… well, that _might_ be a better option than bringing in all of OFono, since (in my case) we are not actually building a Linux phone, but just using this on desktop/laptop/RPi/Steam Deck.

…or perhaps once I try it I'll discover OFono is not that intrusive and maybe it's ok.

—————

In any case, if you do make any further discoveries, manage to make this work, or even write a tutorial before I get around to it — please leave a note here. Even if you merely make it work, leave at least a one-liner to let me know: I am curious. (Or if you can just enumerate your stack that made it work, that'd be even better. But just a short note that it works would be appreciated.)

To be clear, my personal interest and scope here is just getting the AT+BVRA trigger into userland software that is unaware of the button press on my Bluetooth Combadge being anything special. Software should just see a keycode, or a libinput event, or (if nothing else) a DBus signal. If audio continues to work (taking mic input from the combadge, playing output to the combadge), that's even better.

How it happens is not as important once it works.