So you’re Apple.

So you’re Apple.

And not the magical, mythical, always inspired Apple. You’re the Apple that has been a market leader for a while and is now being overtaken by new devices. You’re the Apple that is clinging to a philosophy that has served you well: don’t inconvenience your customers, and keep backward compatibility as long as possible. Change stuff when needed, and do so gradually.

Try not to break stuff

Developers often get this treatment from Apple. You are gradually eased out of your old practices and “encouraged” to adopt new ones. There are rarely any sudden changes. Even UDID was not suddenly thrown out of the OS; it was first deprecated, and then thrown out of iOS 6 after months and months of easing developers into new practices. I can’t really remember significant mistreatment of developers, aside from weird App Store rejections and the insistence on a quite limiting sandboxing model. (Even there, a line had to be drawn somewhere in order to make everyone happier and enforce better development practices.)

Aside from developers, Apple also doesn’t want end users, its primary customer base, to be inconvenienced. iOS 6 broke several things in several apps I work on when I first launched them in the Simulator, and I completely understand what went wrong and that most of what Apple changed is for the better. (I’m unsure how force-crashing the app when no auto-rotation target can be calculated counts as a change for the better, though.)

However, in order not to break existing apps, Apple seems to have employed the model from OS X, where behaviors occasionally change based on the SDK the app was built against. I’m pretty convinced that several classes that ship in UIKit behave differently based on whether or not the iOS 6 SDK was used in compiling the app.

Don’t reject change

However, there’s a thing that Apple does that is also easy to notice: they are not resistant to change. Microsoft has tried very hard to keep backward compatibility as far back into history as possible. Apple has taken many opportunities to shed legacy code and practices. Mac OS to Mac OS X was a chance to throw away some legacy Macintosh Toolbox practices and establish Carbon as a nearly-Toolbox-but-not-quite replacement, with somewhat better structured code. The PowerPC to Intel transition was a chance to break some code, but not a lot. Intel to Intel 64-bit was a chance to throw away most of Carbon and to introduce a new, but incompatible, runtime for Objective-C that is extended to this day without breaking existing apps. (One of the major things this new runtime brought was the non-fragile ABI, which basically means Apple can add instance variables to Objective-C classes without breaking code compiled against the older versions of those classes.)

Apple has recently deprecated development for armv6 devices, and with Xcode 4.5 and iOS 6 decided to completely do away with support for it. This is surely inconveniencing some developers who, like me, up until then used an iPhone or iPhone 3G as their main iOS device, and inconveniencing users who are no longer going to be able to get new versions of apps. And unless they backed up the .ipa files, they won’t be able to install versions that did support their device.

They are not afraid to change stuff. They are careful to ease developers in. And the myth that Apple magically “knows” what users want has been dispelled recently. And of course, it’s only logical that Apple, its engineers, as well as its management, do live in the same world that we do.

They see what people want because they observe. They may end up deciding on a price point based on profit margins, or on profit now instead of profit later, and they may end up deciding what goes into the device based on what they can realistically construct best. But they definitely, definitely do watch what people want.

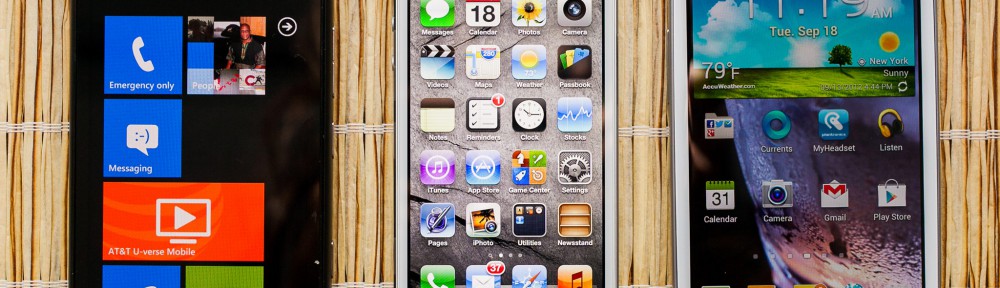

People want bigger devices

Remember 2007 and 2008, even 2009? Remember how people complained about iPhone’s size?

Jump to 2011 and 2012. iPad has come, other tablets have come, and people have seen that they, in reality, want bigger devices. Something rare has happened: Apple has missed what people want. (G4 Cube was another instance, and their insane prices in ‘less wealthy’ countries may be other instances.)

A controversial subject in ‘certain’ countries in the world, evolution theory, does in fact work. Perhaps we cannot go to the past to see that Earth was, in fact, inhabited by life forms that changed in response to environmental changes and that mutated; perhaps denialists can produce odd explanations for the abundance of evidence we have in favor of evolution theory.

But ‘genetic algorithms’ definitely do show that mutation, recombination, and overall improvement in small steps (extolling beneficial adaptations, ignoring harmless ones, and shunning harmful ones) actually work.
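For the curious, here is a toy sketch of the idea in Python. It is not any particular library's algorithm; the function name `evolve`, the bit-string target, and all the parameters are made up for illustration. It only shows selection, recombination, and mutation working together, with the best candidate always carried over so the top score never gets worse.

```python
import random


def evolve(target, generations=200, population_size=30, mutation_rate=0.05, seed=0):
    """Evolve random bit strings toward `target`; return the best score per generation."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    length = len(target)

    def fitness(candidate):
        # Number of positions that already match the target.
        return sum(1 for a, b in zip(candidate, target) if a == b)

    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(population_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        if history[-1] == length:
            break  # perfect match, nothing left to improve
        survivors = population[: population_size // 2]  # shun the weaker half
        children = [survivors[0][:]]                    # keep the best one unchanged
        while len(children) < population_size:
            mother, father = rng.sample(survivors, 2)
            cut = rng.randrange(1, length)
            child = mother[:cut] + father[cut:]         # recombination
            child = [bit ^ 1 if rng.random() < mutation_rate else bit
                     for bit in child]                  # mutation
            children.append(child)
        population = children
    return history


history = evolve([1, 0] * 10)
print(history[0], "->", history[-1])
```

Because the best candidate survives every round, the printed score can only stay the same or go up from one generation to the next.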

Android has flooded the market and evolved.

Adaptation

With Android, there were a lot of mutations. It started as a poor product and slowly grew. Various screen sizes, various input devices, various launchers, stock versus modified OS: all these things were mixed, matched, recombined, changed, and in the end we got devices like Galaxy S3 and Galaxy Note.

I have not owned an Android device, nor do I foresee that I will in the near future. I have played only a little while with several tablets, and my sister has an Android phone. I’m utterly unimpressed by the environment itself. I’m occasionally impressed by what openness has allowed, while at the same time unimpressed by the flooded Google Play (formerly Android Market), with applications not being vetted, with applications that suspiciously request far more permissions than they need, with abhorrent and unnecessary NFC…

Yet a good point is being made.

All these changes, mutations, mixes and matches have produced several outstanding devices, and widened our horizons.

Android may be an initially stolen (and an initially poorly stolen, at that) product. What amounts to industrial espionage by a certain former board member at Apple has produced a vibrant smartphone market that even Apple will learn from. Notification Center is one example where Android took the lead, where a developer for jailbroken iOS systems has created a Notification Center, and where he ended up being hired to replicate it at Apple in an even better way than he did on jailbroken 4.x systems.

(Note that iOS itself has taken a lot of, shall we say, ‘hints’ from the old Palm OS, from Pocket PC and Windows Mobile — primarily packing a lot of UI ideas into a highly animated and fluent, NeXT-related UNIX-based operating system.)

What’s the future bringing? Screen size changes!

Apple has done a great thing for developers when the retina screen came out. The change was so radical that they needed all developers to upscale apps to 2x their size. So they did the same thing Palm did and that sometimes Microsoft did: they simply said that a pixel is not a pixel is not a pixel, but that a pixel is instead a ‘point’. They did something different, too: they simply said that the upscaling will be solely at 2x rate, not 1.5x, not 2.5x. They also made use of sub-‘point’ precision easy (albeit occasionally inconsistent, if you dig deep enough — for example, CATiledLayer does some weird stuff).
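To make the ‘point’ arithmetic concrete, here is a small sketch in plain Python (not UIKit, so it runs anywhere). The helper names `points_to_pixels` and `pixel_aligned` are my own inventions for illustration; the only facts assumed are the 320×480 point screen and the 1x and 2x scale factors mentioned above.

```python
def points_to_pixels(width_pt, height_pt, scale):
    """Convert a size in points to device pixels for an integer scale factor."""
    if scale not in (1, 2):
        # Assumption: only the 1x and 2x factors discussed in the text.
        raise ValueError("only 1x and 2x scales are modeled here")
    return (width_pt * scale, height_pt * scale)


def pixel_aligned(value_pt, scale):
    """Snap a coordinate in points to the nearest device pixel boundary."""
    return round(value_pt * scale) / scale


print(points_to_pixels(320, 480, 1))  # non-retina: one pixel per point
print(points_to_pixels(320, 480, 2))  # retina: four pixels per square point
print(pixel_aligned(10.3, 2))         # half-point positions exist at 2x
```

The same layout code, written in points, produces 320×480 pixels on the old screen and 640×960 on the retina one, and at 2x a coordinate such as 10.5 points lands exactly on a pixel boundary.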

However, it turns out that people also want screens of different sizes, aspect ratios et al. It turns out that even people who are hard-core Apple fanboys clamor for a bigger iPhone for easier reading, even though they immediately say that a bigger size would “not fit the hand as well as the current size”. (And I don’t agree, considering that — surprise, surprise! — people have different hand sizes, hence the current size does not fit as well.)

How would you bring different screen sizes?

So on OS X, developers have been trained for a long time to use the “struts and springs” model to make the user interface elements (views, we call ’em) respond to the size of their parent view. For example, when a window resizes, many sub-elements resize as well.

iOS also has the struts-and-springs model available. Either in Interface Builder or manually via the -setAutoresizingMask: method, a developer can quite easily make the UI resizable.

Except many people never bothered more than absolutely necessary; for example, they designed screens without a navbar even though the navbar could actually appear at runtime. They never had to bother because, with the window size fixed, they never had to deal with multiple screen sizes. One of the only two places where the screen size changed radically was the transition to iPad, which in itself required a radically different user interface model (and use of different nib files was VERY encouraged).

The other place where window and view sizes varied was the very, VERY underused TV-out display support for every UIWindow — which existed since iOS 3.2.
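Here is a rough model of what struts and springs do along one axis, sketched in plain Python rather than UIKit. This is a simplification under my own assumptions (extra space is split between the flexible parts in proportion to their current size), not UIKit's actual implementation; the function name `autoresize` and its parameters are hypothetical. In real code the flexible parts correspond to autoresizing mask flags such as UIViewAutoresizingFlexibleHeight.

```python
def autoresize(origin, length, old_parent, new_parent,
               flexible_leading=False, flexible_length=False,
               flexible_trailing=False):
    """Return the child's (origin, length) after its parent is resized.

    The axis is split into leading margin, child length, and trailing margin.
    Flexible parts ("springs") absorb the size change; fixed parts ("struts")
    keep their size.
    """
    trailing = old_parent - origin - length
    delta = new_parent - old_parent
    sizes = [origin, length, trailing]
    flexible = [flexible_leading, flexible_length, flexible_trailing]
    if not any(flexible):
        # All struts: the child stays glued to the leading edge, unchanged.
        return origin, length
    flexible_total = sum(s for s, f in zip(sizes, flexible) if f)
    flexible_count = flexible.count(True)
    new_sizes = []
    for size, is_flexible in zip(sizes, flexible):
        if not is_flexible:
            new_sizes.append(size)
        elif flexible_total > 0:
            # Share the change in proportion to the current size.
            new_sizes.append(size + delta * size / flexible_total)
        else:
            # Every flexible part has zero size: split the change evenly.
            new_sizes.append(size + delta / flexible_count)
    return new_sizes[0], new_sizes[1]


# A content view below a 44-point bar, parent growing from 480 to 568 points.
print(autoresize(44, 436, 480, 568))                        # no springs
print(autoresize(44, 436, 480, 568, flexible_length=True))  # flexible height
```

With no springs the content view keeps its 436-point height and leaves an 88-point gap at the bottom; with a flexible height it grows to fill the taller parent.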

Training developers to use autoresizing masks

So if you ever wanted to train people to use autoresizing masks in preparation for a variable screen size, how would you approach the problem, Apple-style?

Obviously, you first need to deprecate the old-style development; that is, iOS app development with minimal autoresizing support. If you can’t actually force devs to use it (and with autoresizing masks, you can’t), then nudge them in the right direction.

One way is to explore what else is blocking good use of autoresizing and make sure you fix that problem. In case you can’t actually fix the problem elegantly, still provide the solution as a way to nudge developers to notice you really, really want to emphasize autoresizing.

The final step involves the actual introduction of a device that uses a radically different screen size, without inconveniencing users by blocking operation of old apps.

What are the first two steps?

The first step involved the release of iPhone 5. This was a definite wake-up call that you really, really need to iron out those few places where you managed to mess up autoresizing. You don’t need to fix anything on the x axis, but the y axis is fair game. On launch day of iPhone 5, you realized that the rumors were true, and that whatever you had read about Apple never changing the resolution except by scaling in powers of two was wrong. You, the dev, now needed to adapt to what other platforms already had to handle: resolutions change, and not just by scaling in powers of two. DPI (or, perhaps, PPP, pixels per ‘point’) does change in powers of two, but the resolutions do not. In fact, you realize that you were groomed for the resolution staying fixed at 480×320 points, and that suddenly that’s no longer true.

Now you are not in the world of 480×320, but in the world of 568×320, and your every assumption is fair game.

However, if you don’t ship that one file called Default-568h.png (or, as Apple nudges you to call it, Default-568h@2x.png), you’re getting a pass. We won’t screw your app over just yet. This is just an introduction… but you’d better adapt! You don’t want black bars on the top and bottom of the user’s device. And think wider* too: iPad users get the black box around iPhone apps as well as a lower resolution. Don’t you think iPhone 5 users deserve better, just like iPad users?

* See what I did there?
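The size of those black bars is simple arithmetic, sketched below in plain Python. The function name `letterbox_bars` and the flag `has_tall_launch_image` are hypothetical; the only inputs assumed are the 480-point and 568-point screen heights and the rule that the tall launch image is what opts an app into the full screen.

```python
LEGACY_HEIGHT_PT = 480  # height old apps were designed for, in points


def letterbox_bars(screen_height_pt, has_tall_launch_image):
    """Return (bar height per edge, usable app height), both in points."""
    if has_tall_launch_image or screen_height_pt <= LEGACY_HEIGHT_PT:
        # The app gets the whole screen.
        return 0, screen_height_pt
    # The old 480-point canvas is centered; the rest is split into two bars.
    bar = (screen_height_pt - LEGACY_HEIGHT_PT) / 2
    return bar, LEGACY_HEIGHT_PT


print(letterbox_bars(568, has_tall_launch_image=False))
print(letterbox_bars(568, has_tall_launch_image=True))
```

Without the tall launch image, the app keeps its 480-point canvas and gets a 44-point bar above and below it, which is 88 pixels each on the retina screen.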

What’s the second step? Something called “automatic layout” being force-fed to developers by default in Xcode 4.5. Never mind that it’s as usable as a brick: it is quite powerful, and if the dev takes the time to correctly build the UI using automatic layout, localization becomes much easier. It’s completely unusable in its current state, though, so I suspect a lot of devs (especially those who don’t widely localize their apps) will stick with the old-but-reliable struts-and-springs model.

As I said, the second step may not involve creation of something usable. It does involve nudging devs in the right direction.

Future devices

So the third step is, of course, wholehearted acceptance of what Apple already knows, but developers may only suspect: variable resolution displays.

Apple has heard folks who clamor about Galaxy Note’s size. Apple has realized that there’s a sweet spot between iPhone and iPad screen sizes.

iPad mini is further proof that Apple is willing to play with screen sizes. It didn’t play with the resolution yet, but I feel it’s only a matter of time before Apple releases a non-4:3 iPad. Perhaps with a built-in phone.

Perhaps a phone which can also run UIUserInterfaceIdiomPad applications adapted for the new resolution?

Maybe we’ll also see a Default.png mini-revolution, where we will specify to Apple how they should resize the Default.png image, or where devs will be allowed to run some very simple Core Graphics code upon app installation in order to draw the image, instead of shipping various images for various resolutions and orientations? For a completely universal app in complete compliance with best practices for Default.png, one that is iPhone 5 and iPad 3-aware and still supports iPhone 3GS, you have to ship no fewer than 5 images. If my prediction comes true, it’s only going to get worse.
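To show where that count comes from, here is a small Python sketch that lists the launch image file names. It assumes the usual naming pattern of base name, optional orientation, optional @2x, and optional ~ipad device suffix; the function name `launch_images` and its parameter are mine, and which orientation-specific files you actually need depends on your app.

```python
def launch_images(orientation_specific_ipad=False):
    """List launch image names for a universal app (illustrative sketch)."""
    # iPhone: non-retina, retina, and the tall iPhone 5 image.
    names = ["Default.png", "Default@2x.png", "Default-568h@2x.png"]
    # iPad: either one image per scale, or one per orientation and scale.
    if orientation_specific_ipad:
        orientations = ["-Portrait", "-Landscape"]
    else:
        orientations = [""]
    for orientation in orientations:
        for scale in ("", "@2x"):
            names.append(f"Default{orientation}{scale}~ipad.png")
    return names


print(len(launch_images()), launch_images())
print(len(launch_images(orientation_specific_ipad=True)))
```

The bare minimum works out to 5 files, and separate portrait and landscape images for iPad bring it to 7, so every new screen size adds to the pile.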

I have absolutely no idea how Android solves the problem, nor am I really interested.

How hard is it to adapt?

I don’t think that adapting will be too hard. What one must take into account is that, all of a sudden, we will have to make wider use of resizable-image-with-cap-insets support introduced in iOS 5, and enhanced in iOS 6. I suspect Interface Builder will be enhanced in order to permit easier creation of such images (which currently have to be constructed in source code). Aside from ‘just’ skinning buttons, although even that was avoidable by having the app’s artist export each and every button with static text already painted on it, we’ll find out that suddenly there are a lot more backgrounds we have to skin, including the table view backgrounds.
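For those who have not used cap insets yet, here is the geometry in a plain Python sketch. On iOS the real work is done by UIImage’s resizableImageWithCapInsets: method; the names `Insets` and `resized_center` below are hypothetical, and the sketch models only the sizes involved, not the drawing.

```python
from collections import namedtuple

# Cap insets in points, in the same order UIEdgeInsets uses.
Insets = namedtuple("Insets", "top left bottom right")


def resized_center(image_size, insets, target_size):
    """Return (source center size, center size after resizing).

    The four corners inside the insets keep their size; only the region
    between them is stretched or tiled to fill the target.
    """
    width, height = image_size
    target_width, target_height = target_size
    caps_w = insets.left + insets.right
    caps_h = insets.top + insets.bottom
    if width <= caps_w or height <= caps_h:
        raise ValueError("insets leave no resizable center in the image")
    if target_width < caps_w or target_height < caps_h:
        raise ValueError("target is too small to hold the caps")
    source_center = (width - caps_w, height - caps_h)
    target_center = (target_width - caps_w, target_height - caps_h)
    return source_center, target_center


# A 30x30 button skin with 10-point caps, stretched to a 200x44 button.
print(resized_center((30, 30), Insets(10, 10, 10, 10), (200, 44)))
```

One small 30×30 image can skin a button of any size: the 10-point corners stay crisp while the 10×10 center fills the remaining 180×24 area.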

But I’m almost sure that this revolution will happen.

If anything, Scott Forstall leaving Apple is probably going to make this easier. Who knows — perhaps he was one of the people pushing for never changing the UI resolution, based on his judgement of appropriateness of the platform for withstanding such a change. Perhaps he didn’t even care, and the decision was made by another manager or engineer.

Whatever the case — the point of this entire rant is that varying screen resolutions are coming. Perhaps not as wildly as was the case with Android, but we will see them change. We may even see a sort of convergence of iPhone and iPad, with iPad pushing out the iPod touch from the game.

It would be in line with the lineup simplification that Apple likes to do every couple of years. So just as the Mac Pro is probably about to die, perhaps iPod touch will die, too.

A possible future lineup?

| – | Professional | Consumer |
| --- | --- | --- |
| Mobile | iPhone | iPad |
| Desktop | iMac | Mac mini |
| Portable | MacBook Pro | MacBook Air |
| TV | Apple TV HD | Apple TV |

What do you think?